The ongoing concerns surrounding the YouTube Kids application – including its inability to completely filter out inappropriate content, as well as the way it blends content and commercial advertising in ways that may be difficult for children to understand – have now reached the U.S. Senate.

Today, Senator Bill Nelson (D-FL) took the floor to discuss the application and its issues, as well as the changes Google has made following pressure from Nelson and consumer watchdog groups over the app's content.

Nelson had sent Google a letter in June that raised questions about the content in YouTube Kids and about how the app handled advertising.

At the time, the Senator wrote that while he applauded the company's effort to create a destination for kids focused on education and entertainment, Google needed to ensure that kids were not "unnecessarily exposed to inappropriate content, especially since parents may rely on the very notion that such a service is 'for kids' and, thus, safe for their unfettered usage."

“Given Google’s considerable technical expertise, the company can presumably and readily deploy effective filtering tools to screen for unsuitable videos,” he added.

YouTube earlier this month rolled out an update to the Kids app in response to the ongoing complaints, but consumer watchdog groups claim the changes still haven’t gone far enough.

In the new version of the app, parents will now be shown a dialog box that informs them how videos are chosen as well as how they can be flagged when inappropriate ones get past the algorithms and human editors. Parents are also now asked to explicitly enable or disable the “search” feature, which is how kids were coming across a lot of the inappropriate content to begin with. (The feature let them search for videos that weren’t included in YouTube Kids’ curated selection.)

Meanwhile, YouTube's position on advertising is murkier. The company says that only "paid" ads have to meet its advertising guidelines; user-uploaded content, even if it is commercial in nature, is not flagged as an ad.

Consumer watchdog groups, including the Campaign for a Commercial-Free Childhood (CCFC) and the Center for Digital Democracy (CDD), have pointed out that paid advertisements make up only a tiny fraction of the commercial content on YouTube Kids, while material such as TV commercials, company-produced promotional videos, host selling and paid endorsements isn't necessarily labeled as advertising.

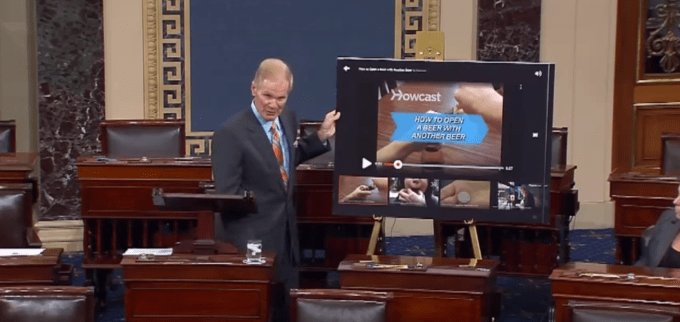

Today, Senator Nelson stressed that Google needed to do more about the content in YouTube Kids and presented to the Senate several examples of inappropriate videos found in the app.

Using a number of poster boards, he flipped through screenshots from YouTube Kids, including a video with instructions on how to tie a noose; a "Howcast" video titled "How to open a beer with another beer"; a video of wine-tasting tips; a "nursery rhyme for babies" video containing bad language; a video explaining how to make sulfuric acid; another showing how to make toxic chlorine gas; and so on.

“This can go down to the toddler age…is that appropriate for young children?” Nelson asked the floor.

He then shared with the Senate Google's official response to his inquiries, which essentially described the changes we wrote about this month: the way YouTube classifies paid advertising and the instructional information now provided to parents.

“I want to share with the Senate what I think is steps in the right direction, but it’s not enough,” said Nelson, before reading Google’s letter.

Though the tech community sometimes pokes fun at legislators' lack of understanding of how technology works, Nelson made a few good points.

For example, why would a YouTube Kids application ever return results for a search on the keyword "beer"? Shouldn't that be fairly simple to filter out without requiring a parent to intervene (i.e., by disabling the search feature entirely)?

Nelson also noted that Google didn't answer his question about the age range the YouTube Kids app targets, and didn't clearly answer a question about how long inappropriate videos remain online after they've been flagged. (Google had told us "within hours.")

Additionally, after reading Google’s response as to how it handles ads, Nelson quipped, “that’s nice. Now, how do we get those beer advertisements off of there?”

However, Nelson missed an opportunity to point out that because Google enforces its policy only on "paid" ads, it isn't regulating the other advertising on the service that YouTube users upload themselves, often without proper disclosure. Instead, the Senator was far more concerned with the adult-oriented content found in the app.

Concluding his briefing, Nelson didn’t let Google off the hook, saying that while he appreciated the response to his inquiry, these changes were only a “first step.”

“If there’s a privilege of doing an app like this, then there must be accountability. And Google must accept that responsibility to be accountable,” he said.