So it was intriguing to hear that Apple had decided to try to help out in this area. Smartphones have a number of significant advantages: people use them already, users already accept that phones can gather information on everything from our location to our fingerprints, and it's possible to develop sophisticated software that runs on the devices. The problem has been that medical researchers' software talents tend to lie in building statistical models, not sophisticated user-facing software.

ResearchKit had the potential to make a decent GUI much more manageable. But Apple hadn't even revealed what was in the framework, so it was difficult to tell how much easier it would be—or even what problems it attempted to solve. Now that it's possible to take a look, it's obvious that Apple has created a simple programming paradigm that works in a number of contexts, one that takes the need to build a GUI almost entirely out of the developer's hands.

Under the hood

The fundamental unit of action in ResearchKit is a task. Tasks are used for pretty much everything ResearchKit does: providing informed consent, filling out surveys, and providing interactive tests for the user. Each of these items is broken down into a series of steps, and a task manages each series.

All tasks conform to a protocol called ORKTask, meaning that they have a standard suite of information and functions they can perform. One of those pieces of information is a property called "steps," which contains an ordered array of the individual components of the task. These steps can be as simple as asking a yes-or-no question (like "have you read and understood all of the above"), displaying a more complicated form, or even gathering data from things like voice or memory tests.
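The task-holds-an-ordered-list-of-steps idea described above can be sketched in a few lines of Swift. This is only a minimal model: `SketchTask`, `SketchStep`, and `OrderedSketchTask` are hypothetical stand-ins invented for illustration, not ResearchKit's actual types or signatures.

```swift
// Hypothetical stand-ins for illustration; these are NOT ResearchKit types.
struct SketchStep {
    let identifier: String
    let prompt: String
}

protocol SketchTask {
    var identifier: String { get }
    var steps: [SketchStep] { get }   // ordered components of the task
}

struct OrderedSketchTask: SketchTask {
    let identifier: String
    let steps: [SketchStep]

    // Return the step that follows `current`, or the first step when `current` is nil.
    func step(after current: SketchStep?) -> SketchStep? {
        guard let current = current else { return steps.first }
        guard let index = steps.firstIndex(where: { $0.identifier == current.identifier }),
              index + 1 < steps.count else { return nil }
        return steps[index + 1]
    }
}

let consent = OrderedSketchTask(
    identifier: "consent",
    steps: [
        SketchStep(identifier: "overview", prompt: "Study overview"),
        SketchStep(identifier: "understood",
                   prompt: "Have you read and understood all of the above?")
    ]
)

// Walk the steps in order, roughly as a task-level controller would present them.
var visited: [String] = []
var current = consent.step(after: nil)
while let step = current {
    visited.append(step.identifier)
    current = consent.step(after: step)
}
print(visited)
```

The point of the design is that the task only knows the order of its steps; how each one looks on screen is somebody else's problem.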

Each step comes with a view controller (ORKStepViewController), which mediates the interactions between the user interface and the underlying data. The task also has a view controller, which helps manage switching between the individual steps. It also stores the data returned by the users.

All of these pieces are in keeping with the standard conventions of Mac/iOS programming. The difference comes when making the actual interface. Typically, the developer needs to lay out the interface in Xcode and explicitly load the interface before displaying it. ResearchKit appears to eliminate that requirement. All you have to do is configure a step object (such as ORKQuestionStep) and add it to a task; generating the interface is handled by the underlying framework based on the type of step you've configured.

For example, there are a dozen different pre-configured ORKQuestionTypes, from a simple yes/no to things like multiple choice and numerical values, up through dates and free-form text entry. Alternatively, you can choose from a set of pre-defined tasks that require more complicated user involvement, like doing a fitness test or spatial memory check.
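To make the "configure a step, let the framework build the interface" idea concrete, here is a rough, self-contained sketch. Everything in it is an assumption made for illustration: `SketchAnswerType`, `SketchQuestionStep`, and `controlDescription(for:)` are hypothetical names, and the real framework's question types and rendering logic are not modeled.

```swift
// Hypothetical types; not the ResearchKit API.
enum SketchAnswerType {
    case boolean
    case multipleChoice([String])
    case numeric(unit: String)
    case date
    case freeText
}

struct SketchQuestionStep {
    let identifier: String
    let title: String
    let answer: SketchAnswerType
}

// The "framework" side: choose a control based only on the configured answer type.
func controlDescription(for step: SketchQuestionStep) -> String {
    switch step.answer {
    case .boolean:
        return "Yes/No buttons"
    case .multipleChoice(let options):
        return "Picker with \(options.count) options"
    case .numeric(let unit):
        return "Number field (\(unit))"
    case .date:
        return "Date picker"
    case .freeText:
        return "Text view"
    }
}

// The "developer" side: describe the questions, never the layout.
let survey = [
    SketchQuestionStep(identifier: "diagnosed",
                       title: "Have you been diagnosed with asthma?",
                       answer: .boolean),
    SketchQuestionStep(identifier: "frequency",
                       title: "How often do you have symptoms?",
                       answer: .multipleChoice(["Never", "Sometimes", "Often"])),
    SketchQuestionStep(identifier: "inhaler",
                       title: "How much do you use your inhaler?",
                       answer: .numeric(unit: "puffs per day"))
]

let controls = survey.map(controlDescription(for:))
print(controls)
```

The developer-facing half of the sketch contains no layout code at all, which is the trade the framework appears to be offering.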

Working with ResearchKit should generally be a matter of configuring a collection of steps meant to get the data you need, grouping them into tasks, and then firing them off. Obviously, there may be circumstances where the built-in features won't work and some custom interface programming will be required. Apple encourages anyone who creates a custom class for these cases to donate it back to the community.

ResearchKit and actual, uh, research

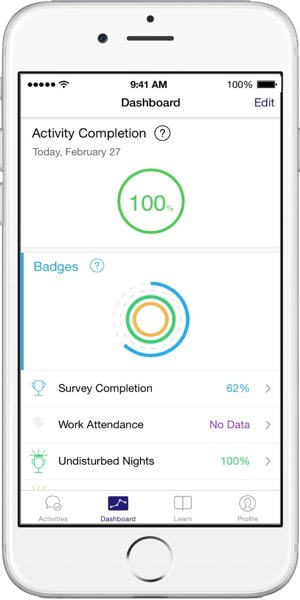

To get a bit more perspective on how useful this is, we talked to Corey Bridges, the CEO of a company called LifeMap Solutions, which has partnered with some academic institutions to develop some of the initial ResearchKit apps. Bridges told Ars that "ResearchKit streamlines the entire data collection and research process and enables more comprehensive data gathering than was possible with traditional studies."

The step/task paradigm would appear to work pretty well for a number of standard research practices. For example, informed consent will often involve displaying a lot of text followed by some simple questions, gathering demographic data will be a series of simple questions, etc. While there will be some specialization (the consent classes include one specialized for determining if the data gathered can be shared, for example), the idea seems to be to take the most common tasks and make them extremely simple to perform.

Beyond handling questions, most of the pre-built classes are focused on providing access to things that cell phones handle well. One example is a spatial memory game, where you have to remember where specific images are located after they've been obscured. Another is tracking location during a walk; yet another records speech. There's little doubt that developers will eventually think of other ways to make clever use of cellphone hardware.

But those developers aren't the main audience for ResearchKit. The main audience is the people who wouldn't otherwise think of developing a cellphone app. Typically, they'd be involved in research projects that simply didn't have the budget for this kind of software, with custom UIs needed for consent, data gathering, etc.

More generally, simply opening up a large fraction of the smartphone-using population has some significant implications for research. "Typically, the number of participants in a clinical study is limited by geographical constraints and the cost of consenting and enrolling subjects in person," Bridges told Ars. "With ResearchKit, data can be captured in real time from a far larger, more geographically distributed, and more diverse subject pool at much lower cost." Bridges said that in the first week of its release, an app he worked on with Mt. Sinai researchers was able to enroll and obtain informed consent from more than 4,500 participants.

Probably the biggest feature that's left out of ResearchKit is the ability to communicate data back to the researchers. Apple doesn't want this data to ever see its servers, so it's up to the researchers to ensure secure transmission back to their home institutions.
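Since the framework leaves that hand-off to the researchers, an app has to package its results itself. One possible first step, sketched here with Foundation's `JSONEncoder`, is turning the results into a payload; the `SketchStepResult` and `SketchTaskResult` records and the `participantCode` field are hypothetical, and the secure transport (and any encryption) is deliberately left out.

```swift
import Foundation

// Hypothetical result records; ResearchKit's own result classes are not modeled here.
struct SketchStepResult: Codable, Equatable {
    let stepIdentifier: String
    let answer: String
}

struct SketchTaskResult: Codable, Equatable {
    let taskIdentifier: String
    let participantCode: String   // assumed pseudonymous code, not a name
    let results: [SketchStepResult]
}

// Encode a task result into a JSON payload that a study's own server could accept.
func makePayload(for result: SketchTaskResult) throws -> Data {
    let encoder = JSONEncoder()
    encoder.outputFormatting = [.sortedKeys]   // stable key order makes payloads easier to compare
    return try encoder.encode(result)
}

// Decode a payload, as the receiving side would.
func readPayload(_ data: Data) throws -> SketchTaskResult {
    return try JSONDecoder().decode(SketchTaskResult.self, from: data)
}

let taskResult = SketchTaskResult(
    taskIdentifier: "daily-survey",
    participantCode: "P-0001",
    results: [
        SketchStepResult(stepIdentifier: "diagnosed", answer: "yes"),
        SketchStepResult(stepIdentifier: "frequency", answer: "Sometimes")
    ]
)

// Round-trip the result to confirm that nothing is lost in encoding.
let payload = try? makePayload(for: taskResult)
let decoded = payload.flatMap { try? readPayload($0) }
print(decoded == taskResult)
```

Getting that payload to the home institution over a secure channel is exactly the part Apple leaves to each study.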

We asked Bridges what he felt were the most helpful aspects of the framework. He returned to the informed consent. "E-consenting is groundbreaking because it will help medical researchers overcome one of the major barriers encountered in clinical research today," he said. "E-consent will enable us to reach all corners of the globe (wherever people have iPhones and Internet connectivity) to recruit research volunteers and conduct medical research with sample sizes that are orders of magnitude greater than previously possible, for a fraction of the cost."

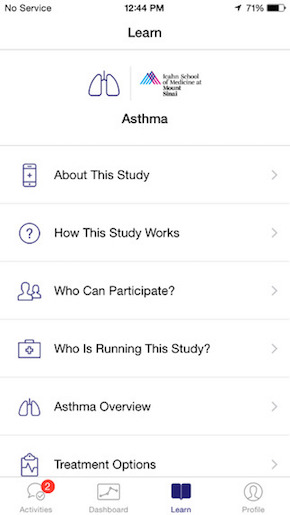

But Bridges was more excited about the sort of data that will come out of it. One of the apps his company worked on focuses on asthma, and he saw a lot of potential for aggregating app and other data. "For example, we can identify correlations between patient’s symptoms/exacerbations, precise geographic location (via GPS), and location-specific environmental data (such as local air quality and pollution)," Bridges said. "This type of granular data allows us to identify and evaluate the impact on asthma of air quality and point sources of allergens and pollution."

Listing image by LifeMap Solutions
