Apple has introduced significant improvements to the iPhone's camera capabilities in nearly every iteration of the device. And while rumors about the next-generation iPhone have largely concerned screen sizes and exotic materials, very little has been rumored about the camera thus far.

We decided to examine some potential technologies Apple could incorporate into the next iPhone to boost its photographic flair. Because the iPhone's camera is a combination of sensor and lens hardware controlled with software, we'll look at each part separately.

Hardware

The iPhone 4S camera hardware is already pretty capable, especially given the constraints of the device's size. It features an 8-megapixel sensor with backside illumination and a "full-well" design, technologies used to maximize its light-gathering capability and dynamic range. Its five-element autofocus lens is sharp and provides even illumination across the image, and its "Hybrid IR" filter helps maximize color accuracy and sharpness.

Sensor

But that doesn't mean there isn't room for improvement. For instance, Apple could increase the megapixel count in the next iPhone, though doing so presents a number of tradeoffs. Typically, increasing pixel count results in smaller physical pixels for a given sensor size. And the smaller the pixel, the less sensitive it is to light.
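As a rough rule, assume a sensor of fixed area $A$ is split evenly into $N$ square pixels of pitch $p$, ignoring the wiring and gaps between photodiodes. Under that simplified model, the light each pixel collects scales with its area:

```latex
p = \sqrt{\frac{A}{N}}, \qquad
\text{light per pixel} \propto p^{2} = \frac{A}{N}
\quad\Longrightarrow\quad
\frac{\text{light per pixel}(N_2)}{\text{light per pixel}(N_1)} = \frac{N_1}{N_2}
```

On this model, going from 5MP to 8MP on the same sensor leaves each pixel with roughly five-eighths of its former light. That is the loss technologies like BSI try to win back.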

In moving from the 3MP sensor of the iPhone 3GS to the 5MP sensor of the iPhone 4, Apple increased the sensor size in order to maintain a 1.75µm pixel pitch. It also added backside illumination (BSI), which effectively flips the sensor upside down so that the wiring layer sits behind the photodiodes instead of blocking light in front of them. BSI brought a marked improvement in dynamic range and low-light shooting.

In moving from the iPhone 4 to the iPhone 4S in 2011, Apple increased the pixel count to 8MP. This shrank the pixel pitch from 1.75µm to 1.4µm, resulting in smaller photodiodes. Even so, Apple was able to improve light-gathering ability by 73 percent, thanks to advanced BSI with a "full well" design, which maximizes the photodiode area within each pixel.

With Apple already using the available technologies to mitigate the tradeoffs that come with higher pixel counts, it may not be able to increase the iPhone camera's resolution without moving to a larger sensor. The iPhone 4 and 4S use a 1/3.2-inch sensor, and increasing the resolution further at that size would push the pixel pitch to the limits of acceptable quality.

However, long-time sensor supplier OmniVision recently announced a 16MP 1/2.3-inch sensor with improved BSI technology. Because the sensor itself is larger, it maintains a pixel pitch similar to that of the current iPhone 4S sensor despite the huge leap in resolution. It also supports much higher-resolution video: 3840×2160, known as "4K2K" or "quad full high definition" (QFHD).
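These pixel-pitch claims are easy to sanity-check with arithmetic. The following sketch divides sensor width by horizontal pixel count. Several inputs are assumptions, so treat the results as estimates:

- The sensor widths (about 4.54mm for 1/3.2-inch and 6.17mm for 1/2.3-inch) are approximate, commonly quoted figures.
- The 4608-pixel width for a 16MP 4:3 sensor is assumed.

```python
from typing import Dict

# Approximate sensor widths in millimeters. The "1/3.2-inch" style names are a
# legacy convention, not literal sizes; these widths are assumed, commonly quoted values.
SENSOR_WIDTH_MM: Dict[str, float] = {
    "1/3.2in": 4.54,
    "1/2.3in": 6.17,
}

def pixel_pitch_um(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate pixel pitch in micrometers, ignoring any border area on the die."""
    return sensor_width_mm * 1000.0 / horizontal_pixels

def relative_light_per_pixel(pitch_um: float, reference_pitch_um: float) -> float:
    """Light per pixel relative to a reference, assuming it scales with pixel area."""
    return (pitch_um / reference_pitch_um) ** 2

if __name__ == "__main__":
    reference = pixel_pitch_um(SENSOR_WIDTH_MM["1/3.2in"], 2592)  # 5MP, iPhone 4 class
    for label, fmt, width_px in [
        ("5MP on 1/3.2-inch", "1/3.2in", 2592),
        ("8MP on 1/3.2-inch", "1/3.2in", 3264),
        ("16MP on 1/3.2-inch", "1/3.2in", 4608),  # 4608 px wide is an assumption
        ("16MP on 1/2.3-inch", "1/2.3in", 4608),
    ]:
        pitch = pixel_pitch_um(SENSOR_WIDTH_MM[fmt], width_px)
        share = relative_light_per_pixel(pitch, reference)
        print(f"{label}: {pitch:.2f} um pitch, {share:.0%} of the 5MP pixel's light")
```

With those assumptions, the sketch gives the following pitches:

- 5MP on a 1/3.2-inch sensor: about 1.75µm.
- 8MP on the same sensor: about 1.39µm, in line with the iPhone 4 and 4S figures.
- 16MP crammed onto that sensor: just under 1µm.
- 16MP on the larger 1/2.3-inch sensor: about 1.34µm.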

Such an increase in resolution isn't on par with the massive 41MP offered in Nokia's new PureView 808 smartphone, but it could allow Apple to offer a similar "oversampling" mode, which trades resolution for improved color accuracy and reduced noise.
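Nokia's own oversampling method is not reproduced here. This is only a minimal sketch of the general idea, using plain block averaging ("binning"), and it assumes independent, random per-pixel noise. Averaging k×k pixels cuts resolution by k in each direction. For independent noise, it also reduces the noise standard deviation by a factor of k. The function names and the synthetic test image are illustrative.

```python
import random
import statistics
from typing import List

Image = List[List[float]]

def bin_pixels(image: Image, factor: int) -> Image:
    """Average non-overlapping factor x factor blocks into single output pixels.

    Assumes both image dimensions are divisible by `factor`.
    """
    h, w = len(image), len(image[0])
    area = factor * factor
    return [
        [
            sum(image[y + dy][x + dx] for dy in range(factor) for dx in range(factor)) / area
            for x in range(0, w, factor)
        ]
        for y in range(0, h, factor)
    ]

def noisy_flat_field(size: int, level: float, sigma: float, seed: int = 0) -> Image:
    """A uniformly lit test image with independent Gaussian noise on every pixel."""
    rng = random.Random(seed)
    return [[level + rng.gauss(0.0, sigma) for _ in range(size)] for _ in range(size)]

def noise(image: Image) -> float:
    """Standard deviation over all pixels; the scene is flat, so this measures only noise."""
    return statistics.pstdev([v for row in image for v in row])

if __name__ == "__main__":
    full = noisy_flat_field(200, 0.5, 0.05)
    binned = bin_pixels(full, 2)  # 2x2 binning: a quarter of the pixels
    print(f"full-res noise {noise(full):.4f}, binned noise {noise(binned):.4f}")
```

With 2×2 binning the measured noise should come out at roughly half the full-resolution value. Real oversampling pipelines also deal with color and demosaicing, which this ignores.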

Still, significantly increasing the resolution would require a larger sensor, and the larger image files would begin to strain the limited storage capacity of the entry-level iPhone models. Apple may not be ready to account for that in the next iPhone iteration.

Sensor patterns

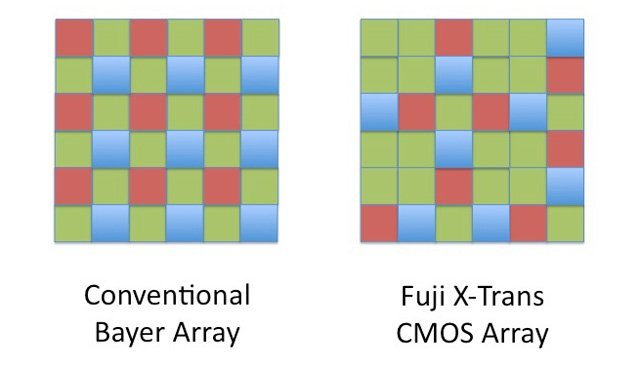

One thing that would not require additional space, however, is an alternate color filter array pattern. Most color image sensors use a Bayer filter, which alternates green and red filters on one row and green and blue filters on the next. Each pixel records only one color, so the full RGB value for each pixel is interpolated from its neighbors, which means the values aren't always perfect. (There are twice as many green pixels as red or blue because the human eye is most sensitive to green light, which carries most of the perceived sharpness and detail.) To help avoid false colors and color moiré patterns, most sensors also have an anti-aliasing filter, which adds a slight amount of blur.
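To make the interpolation step concrete, here is a minimal sketch of bilinear demosaicing for an RGGB Bayer layout, written in plain Python so it needs no imaging libraries. The RGGB phase, the function names, and the 3×3 neighborhood average are illustrative assumptions. Real camera pipelines, including Apple's, use more sophisticated, edge-aware algorithms that this does not reproduce. The example input shows the false-color problem: a clean black-and-white edge comes out with mismatched color channels.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)

def bayer_channel(y: int, x: int) -> int:
    """Channel sampled at (y, x) in an RGGB Bayer layout: 0 = R, 1 = G, 2 = B."""
    if y % 2 == 0 and x % 2 == 0:
        return 0  # red: even rows, even columns
    if y % 2 == 1 and x % 2 == 1:
        return 2  # blue: odd rows, odd columns
    return 1      # green: the remaining half of all photosites

def mosaic(image: List[List[Pixel]]) -> List[List[float]]:
    """Simulate the sensor: each photosite keeps only the one color its filter passes."""
    return [[px[bayer_channel(y, x)] for x, px in enumerate(row)]
            for y, row in enumerate(image)]

def demosaic_bilinear(raw: List[List[float]]) -> List[List[Pixel]]:
    """Rebuild full RGB by averaging same-color samples in each pixel's 3x3 neighborhood.

    For an RGGB layout this matches classic bilinear demosaicing. Assumes the
    image is at least 2x2 so every neighborhood contains all three colors.
    """
    h, w = len(raw), len(raw[0])
    out: List[List[Pixel]] = []
    for y in range(h):
        row: List[Pixel] = []
        for x in range(w):
            sums = [0.0, 0.0, 0.0]
            counts = [0, 0, 0]
            for ny in range(max(0, y - 1), min(h, y + 2)):
                for nx in range(max(0, x - 1), min(w, x + 2)):
                    c = bayer_channel(ny, nx)
                    sums[c] += raw[ny][nx]
                    counts[c] += 1
            own = bayer_channel(y, x)
            # Keep the measured value for this pixel's own color; interpolate the other two.
            r, g, b = (raw[y][x] if ch == own else sums[ch] / counts[ch] for ch in range(3))
            row.append((r, g, b))
        out.append(row)
    return out

if __name__ == "__main__":
    # A sharp vertical edge: white on the left, black on the right.
    edge = [[(255.0, 255.0, 255.0) if x < 3 else (0.0, 0.0, 0.0) for x in range(6)]
            for _ in range(4)]
    print(demosaic_bilinear(mosaic(edge))[0][2])  # (255.0, 170.0, 127.5): false color
```

A uniformly colored scene round-trips exactly. Problems like the mismatched pixel at the edge are what the anti-aliasing filter's slight blur is meant to suppress.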

Fuji has experimented with alternate filter arrays, most recently incorporating a new RGB pattern, which it calls X-Trans, in its X-Pro1 camera. The pattern ensures that every row and column contains red, green, and blue pixels, improving the RGB interpolation. The result is reduced false color and moiré, enough that Fuji eliminated the anti-aliasing filter, which generally yields sharper images.
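The row-and-column property can be checked mechanically. This sketch compares a 2×2 RGGB Bayer tile with a 6×6 X-Trans tile. The X-Trans arrangement is transcribed from commonly published diagrams of the pattern and should be treated as illustrative rather than as Fuji's specification.

```python
from typing import Dict, List

# 2x2 Bayer tile (RGGB phase) and a 6x6 X-Trans-style tile, one string per row.
# The X-Trans layout is an illustrative transcription, not an official spec.
BAYER_TILE: List[str] = ["RG", "GB"]
XTRANS_TILE: List[str] = [
    "GBGGRG",
    "RGRBGB",
    "GBGGRG",
    "GRGGBG",
    "BGBRGR",
    "GRGGBG",
]

def lines_with_all_colors(lines: List[str]) -> int:
    """Count rows (or columns) that contain at least one R, one G, and one B."""
    return sum(1 for line in lines if {"R", "G", "B"} <= set(line))

def columns(tile: List[str]) -> List[str]:
    """Transpose a tile so each column becomes a string."""
    return ["".join(col) for col in zip(*tile)]

def color_share(tile: List[str]) -> Dict[str, float]:
    """Fraction of photosites under each color filter."""
    cells = "".join(tile)
    return {c: cells.count(c) / len(cells) for c in "RGB"}

if __name__ == "__main__":
    for name, tile in (("Bayer", BAYER_TILE), ("X-Trans", XTRANS_TILE)):
        print(name,
              f"rows with R,G,B: {lines_with_all_colors(tile)}/{len(tile)}",
              f"columns with R,G,B: {lines_with_all_colors(columns(tile))}/{len(tile[0])}",
              color_share(tile))
```

The Bayer tile scores 0 on both counts, since every Bayer row and column is missing either red or blue. The X-Trans tile scores 6 of 6. Both patterns keep green dominant: half of the Bayer photosites and 20 of the 36 X-Trans photosites are green.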

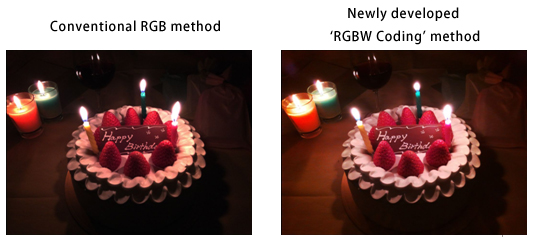

Another alternate sensor pattern that several companies have been developing is "RGBW," which adds unfiltered "white" pixels to the usual RGB array. Panasonic and Sony, among others, are designing and patenting several variations of this technique, but the basic improvement is the same: the unfiltered "white" pixels give better luminance response, increasing dynamic range. Color accuracy can suffer a bit, but at sufficiently high resolutions (even 8MP) the effect is negligible, while the improvement in dynamic range and low-light response is impressive.
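A back-of-the-envelope shot-noise estimate shows why white pixels help in low light. It rests on two idealized assumptions:

- The red, green, and blue filters each pass about a third of the incoming visible light, with no overlap.
- Photon shot noise dominates, so its standard deviation is the square root of the mean photon count $S$.

```latex
\mathrm{SNR}_{\text{color}} = \frac{S}{\sqrt{S}} = \sqrt{S},
\qquad
S_W \approx S_R + S_G + S_B \approx 3S
\;\Longrightarrow\;
\frac{\mathrm{SNR}_W}{\mathrm{SNR}_{\text{color}}} \approx \frac{\sqrt{3S}}{\sqrt{S}} = \sqrt{3} \approx 1.73
```

Real filters overlap and real pixels add read noise, so actual gains will differ. The direction of the effect, however, matches the luminance advantage of unfiltered pixels.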

Folding optics

Most smartphones have a single, fixed-focal-length lens. While Apple added a "digital zoom" function to the iPhone 4 in 2010, this merely crops the middle section of the image and enlarges it. The result is a decidedly less sharp image, so some iPhone snappers have longed for a proper optical zoom lens.
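The resolution cost of digital zoom is simple geometry. A zoom factor z keeps only the central 1/z of the width and height, so only about 1/z² of the captured pixels survive before being enlarged. This small sketch uses the iPhone 4S's 3264×2448 still resolution. The function names are illustrative, and how iOS actually resamples the crop is not modeled.

```python
from typing import Tuple

def digital_zoom_crop(width: int, height: int, zoom: float) -> Tuple[int, int, int, int]:
    """Return (left, top, crop_width, crop_height) of the centered region a digital zoom keeps."""
    if zoom < 1.0:
        raise ValueError("zoom must be >= 1.0")
    crop_w = round(width / zoom)
    crop_h = round(height / zoom)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h

def captured_megapixels(width: int, height: int, zoom: float) -> float:
    """Real sensor pixels left after cropping; the rest of the output is interpolated."""
    _, _, crop_w, crop_h = digital_zoom_crop(width, height, zoom)
    return crop_w * crop_h / 1e6

if __name__ == "__main__":
    for z in (1.0, 2.0, 4.0):
        print(f"{z}x: crop {digital_zoom_crop(3264, 2448, z)}, "
              f"{captured_megapixels(3264, 2448, z):.2f} MP of real detail")
```

At 2× the 8MP sensor contributes only about 2MP of genuine detail, and at 4× about 0.5MP. An optical zoom avoids exactly that loss.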

Apple would not be able to squeeze such a zoom into the current thickness of the iPhone, and we don't think Apple would make the phone thicker to accommodate one. However, it could use a folded zoom design, which has been used successfully in several thin digital cameras, such as the Nikon Coolpix S100 and Pentax Optio WG-2.

Effectively, light would enter through a front element in the camera's current position and be reflected downward by a mirror or prism, and the rest of the lens would sit lengthwise inside the iPhone's casing.
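The fold itself is ordinary mirror reflection, d' = d - 2(d·n)n for a ray direction d and unit mirror normal n. The coordinate system is an illustrative assumption: z points out of the back of the phone and y runs up its long axis. Light entering the lens travels in the -z direction, and a mirror tilted at 45 degrees redirects it along -y, down the length of the body, where a longer lens barrel has room. The 45-degree tilt and the function names are also assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)

def reflect(direction: Vec3, mirror_normal: Vec3) -> Vec3:
    """Reflect a ray direction off a flat mirror: d' = d - 2 (d . n) n, with n normalized."""
    n = normalize(mirror_normal)
    dot = sum(d * c for d, c in zip(direction, n))
    return (direction[0] - 2.0 * dot * n[0],
            direction[1] - 2.0 * dot * n[1],
            direction[2] - 2.0 * dot * n[2])

if __name__ == "__main__":
    incoming = (0.0, 0.0, -1.0)     # light entering through the back of the phone
    mirror = (0.0, -1.0, 1.0)       # assumed 45-degree fold mirror
    print(reflect(incoming, mirror))  # approximately (0, -1, 0): down the phone's length
```

The same 90-degree turn is what lets a folded zoom trade the phone's scarce thickness for its comparatively generous length.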

Space is at a premium inside the iPhone, as all the components are tightly compacted. (Most of the internal space is occupied by the battery.) However, it's possible that more efficient components could give Apple just enough room to squeeze folded optics inside.

Xenon flash

The iPhone, like many smartphones, relies on a relatively bright LED to act as a "flash" for lighting in low-light conditions. The quality of an LED light is, shall we say, not very good.

Apple could improve things by incorporating a proper xenon flash tube. A xenon flash is typically viewed as a luxury for smartphones, because it requires a large capacitor to fire. Finding space inside the iPhone for the necessary power electronics is likely a very low priority, though Apple's engineers clearly have some serious chops in this area.
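The need for a large capacitor follows from the energy it must store. A capacitor of capacitance $C$ charged to voltage $V$ holds $E = \tfrac{1}{2}CV^2$. The values below are illustrative only, not measurements of any particular camera or phone:

```latex
E = \tfrac{1}{2} C V^{2}
\qquad\text{e.g.}\qquad
\tfrac{1}{2}\,(100\,\mu\mathrm{F})\,(300\,\mathrm{V})^{2} = 4.5\,\mathrm{J}
```

Stored energy grows with the square of the voltage, so flash capacitors are charged to hundreds of volts. That is far above the roughly 3.7V a phone battery supplies, which is why a xenon flash also needs dedicated step-up charging electronics.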

One downside of this approach, however, is that a xenon flash can't provide continuous light for video. So Apple would have to include both, ditch the LED altogether, or just stick with the LED, which can work for both purposes.

Software

In addition to improving the hardware, Apple could add one or more software improvements to boost the iPhone's camera performance. For instance, Apple added high dynamic range (HDR) shooting to the iPhone 4 as a software update in iOS 4.1. Apple could bring additional software improvements in iOS 6 to power advances in the next-generation iPhone. A side benefit of this approach is that some or all of the improvements could be back-ported to previous iPhone models as well.
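Apple hasn't said exactly how iOS merges its HDR exposures. The following is only a minimal sketch of one published approach, simplified: a single-scale version of the "well-exposedness" weighting used in exposure fusion. It works on grayscale values in [0, 1] from bracketed frames that are assumed to be already aligned. Each output pixel favors whichever exposure captured that spot closest to mid-gray, so shadows come mostly from the bright frame and highlights from the dark one. The 0.2 weighting width is a common default, treated here as an assumption.

```python
import math
from typing import List, Sequence

def well_exposedness(value: float, sigma: float = 0.2) -> float:
    """Gaussian weight peaking at mid-gray (0.5); clipped shadows and highlights get little weight."""
    return math.exp(-((value - 0.5) ** 2) / (2.0 * sigma ** 2))

def fuse_exposures(frames: Sequence[Sequence[float]]) -> List[float]:
    """Blend aligned, bracketed frames pixel by pixel using well-exposedness weights.

    Each frame is a flat list of grayscale values in [0, 1]; all frames must be
    the same length. Weights are always positive, so the division is safe.
    """
    fused = []
    for samples in zip(*frames):
        weights = [well_exposedness(v) for v in samples]
        fused.append(sum(w * v for w, v in zip(weights, samples)) / sum(weights))
    return fused

if __name__ == "__main__":
    under = [0.02, 0.10, 0.45]   # dark frame: highlights preserved
    normal = [0.10, 0.50, 0.95]
    over = [0.40, 0.90, 1.00]    # bright frame: shadows opened up
    print(fuse_exposures([under, normal, over]))
```

Production HDR pipelines also align the frames, handle color, and blend at multiple scales to avoid halos. None of that is attempted here.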

Super resolution

Apple could improve the resolution of its existing iPhone camera hardware by using a technique called "super resolution." This technique commonly combines multiple exposures of the same scene, each offset by a slight, sub-pixel shift (almost guaranteed with a handheld shot), to create images with more detail and resolution than the sensor can capture in a single frame.
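The core idea can be shown in one dimension with the simplest variant, "shift and add." This sketch makes several idealized assumptions:

- The sub-pixel shifts are known exactly.
- Pixels are point samples, with no blur or noise.

Real super resolution must estimate the shifts by registering the frames and must undo sensor and lens blur. The function names are illustrative, and this is not the method Cortex Camera or Apple uses.

```python
from typing import List, Sequence

def capture_frames(scene: Sequence[float], factor: int) -> List[List[float]]:
    """Simulate `factor` low-resolution captures, each shifted by one fine-grid step.

    Frame k samples scene[k], scene[k + factor], ... (idealized point sampling).
    """
    return [list(scene[offset::factor]) for offset in range(factor)]

def shift_and_add(frames: Sequence[Sequence[float]], shifts: Sequence[int],
                  factor: int) -> List[float]:
    """Place each frame's samples onto a grid `factor` times finer, averaging any overlaps."""
    length = max(len(f) for f in frames) * factor
    sums = [0.0] * length
    counts = [0] * length
    for frame, shift in zip(frames, shifts):
        for i, value in enumerate(frame):
            pos = i * factor + shift
            if 0 <= pos < length:
                sums[pos] += value
                counts[pos] += 1
    # Positions no frame covered stay undefined (NaN).
    return [s / c if c else float("nan") for s, c in zip(sums, counts)]

if __name__ == "__main__":
    stripes = [float(i % 2) for i in range(12)]  # detail finer than one low-res pixel
    frames = capture_frames(stripes, 2)
    print(frames)                                  # each frame alone looks flat
    print(shift_and_add(frames, [0, 1], 2))        # together they recover the stripes
```

In this example each shifted frame on its own sees a featureless field of all 0s or all 1s. Only the combination reveals the fine stripes, which is the extra detail super resolution is after.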

Cortex Camera, a third-party app available for the iPhone 4S, uses super resolution techniques to significantly improve the sharpness and detail of images while also reducing noise. Apple could go one better by building the option into the camera software itself, and by offering APIs that let third-party apps optionally capture super resolution images.

Multiple tap to focus

A recent patent application from Apple shows that it has considered improvements to its "tap-to-focus" feature. By default, the iPhone will focus on the center of the image, and can optionally use facial recognition to focus on people. Users can also tap anywhere within the image to force the iPhone's camera to focus on a particular spot.

Apple suggests that additional gestures could allow users to select more than one focus point, forcing the lens to focus at an intermediate distance so that multiple subjects are as sharply focused as possible. Such a system could also be used to bias the exposure system for multiple subjects, choosing a "compromise" that looks as good as possible under the given lighting conditions.
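The patent application doesn't spell out how the compromise distance would be chosen. One plausible rule comes from a thin-lens approximation: for subjects much farther away than the focal length, defocus blur is roughly proportional to |1/s - 1/d|, where s is the focus distance and d the subject distance. Equalizing the blur for the nearest and farthest subjects then means focusing midway between them in diopters (1/distance), which is their harmonic mean rather than their arithmetic mean. This is a minimal sketch of that idea, with hypothetical function names.

```python
from typing import Sequence

def compromise_focus_distance(subject_distances_m: Sequence[float]) -> float:
    """Focus distance that equalizes defocus blur for the nearest and farthest subjects.

    Sets 1/s to the midpoint of 1/d_near and 1/d_far (thin-lens approximation).
    Distances are in meters; float("inf") is allowed for a subject at infinity.
    """
    if not subject_distances_m or min(subject_distances_m) <= 0:
        raise ValueError("need at least one positive distance")
    near_diopters = 1.0 / min(subject_distances_m)
    far_diopters = 1.0 / max(subject_distances_m)
    return 2.0 / (near_diopters + far_diopters)

def relative_blur(focus_m: float, subject_m: float) -> float:
    """Defocus blur in arbitrary units, proportional to |1/s - 1/d|."""
    return abs(1.0 / focus_m - 1.0 / subject_m)

if __name__ == "__main__":
    subjects = [1.0, 3.0]  # two people, 1 m and 3 m from the camera
    s = compromise_focus_distance(subjects)
    print(f"focus at {s:.2f} m; blur at 1 m: {relative_blur(s, 1.0):.3f}, "
          f"at 3 m: {relative_blur(s, 3.0):.3f}")
```

For subjects at 1m and 3m this picks 1.5m, not the 2m an arithmetic average would give, because blur grows faster for subjects close to the camera.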

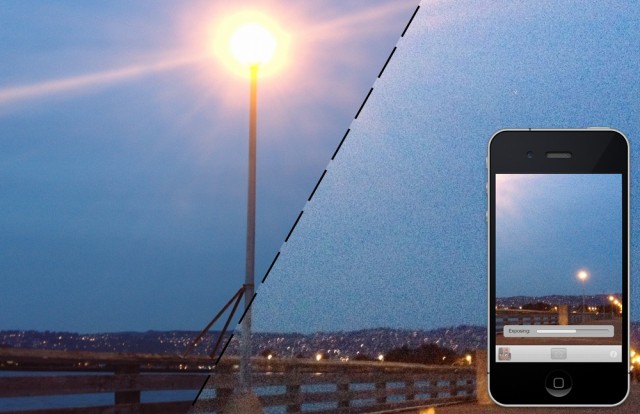

Manual exposure compensation

There's also some pretty low-hanging fruit for Apple to grab in its camera software, such as an exposure compensation control. The current tap-to-focus control adjusts the autoexposure based on the selected focus area, and sometimes that can bias the exposure toward better results. However, we have run into a number of situations where the autoexposure produces an image that's either too light or too dark. Exposure compensation would make it possible to dial it in to "just right."

Apple prides itself on the simplicity and ease-of-use of its software, but we don't think that means that options for advanced users should be left out entirely. A pop-up slider similar to the current zoom control would offer an intuitive way to force the camera to make images lighter or darker as needed.
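Exposure compensation is usually expressed in EV (stops), where each +1 EV doubles the light captured and each -1 EV halves it. The following minimal sketch shows how a pop-up slider might map onto that scale. The ±2 EV range, the third-stop steps, and the function names are hypothetical choices, not anything Apple has announced. The iPhone 4S lens has a fixed aperture, so a real implementation would adjust shutter time and/or sensor gain; only shutter time is modeled here.

```python
def slider_to_ev(position: float, max_ev: float = 2.0, steps_per_ev: int = 3) -> float:
    """Map a slider position in [-1, 1] to an exposure bias in EV, snapped to 1/steps_per_ev stops.

    The +/-2 EV range and third-stop granularity are hypothetical defaults.
    """
    clamped = max(-1.0, min(1.0, position))
    return round(clamped * max_ev * steps_per_ev) / steps_per_ev

def compensated_exposure_time(metered_time_s: float, ev_bias: float) -> float:
    """Scale the auto-exposure shutter time: +1 EV doubles it, -1 EV halves it."""
    return metered_time_s * 2.0 ** ev_bias

if __name__ == "__main__":
    metered = 1 / 60  # hypothetical auto-exposure result, in seconds
    for pos in (-1.0, -0.25, 0.0, 0.5, 1.0):
        ev = slider_to_ev(pos)
        t = compensated_exposure_time(metered, ev)
        print(f"slider {pos:+.2f} -> {ev:+.2f} EV -> 1/{1 / t:.0f} s")
```

Snapping to fixed steps keeps the control predictable: the same slider position always yields the same bias.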

None of the above?

Given the other rumored changes coming to the next iPhone, including a larger screen, improved processor, LTE capability, and perhaps even a radical design change, Apple might instead choose to hold off on any major improvements to the camera hardware. The current hardware in the iPhone 4S is compact and capable of impressive image quality, and the iPhone consistently ranks as the most-used smartphone camera.

It may end up being smarter for Apple to keep using a solution that's proven to work, saving changes for 2013 or beyond. But as usual, we won't know the truth until the next iPhone actually makes its debut later this year.

Listing image by Apple, Inc.
