Apple's push to increase the quality of songs distributed via iTunes has been formally realized in the company's Mastered for iTunes program—but does it really make music sound better?

After our original report on the Mastered for iTunes program, some readers were skeptical that anything could be done to make a compressed AAC file sound comparable to uncompressed, 16-bit 44.1kHz CD standard audio. Others believed users should have access to the original 24-bit 96kHz files created in the studio for the best sound. Finally, some readers suggested that few people can actually tell the difference between iTunes Plus tracks and CD audio, so why bother making any effort to improve iTunes quality?

British recording engineer Ian Shepherd called the entire process of specially mastering audio files for iTunes to sound more like the CD version simple "BS."

"This is where I believe the real sleight of hand is being pulled," Shepherd told CE Pro. "'Optimizing' for lossy codecs shouldn't be necessary, and my test shows that [in the case of Red Hot Chili Peppers, at least] it isn't necessary."

Some musicians and record executives have recently bemoaned the fact that what ends up on a fan's iPod or iPhone is of arguably much lower quality than what is laid down on tape or hard drives in the studio. While some players in the industry have pushed for higher resolution downloads, Apple's current solution involves adhering to long-recognized—if not always followed—industry best practices, along with an improved compression toolchain that squeezes the most out of high-quality master recordings while still producing a standard 256kbps AAC iTunes Plus file.

Shepherd applauded Apple's technical guidelines, which encourage mastering engineers to use less dynamic range compression, to refrain from pushing audio levels to the absolute limit, and to submit 24/96 files for direct conversion to 16/44.1 compressed iTunes Plus tracks. However, he doubted that submitting such high-quality files would make much difference in final sound quality. Shepherd's conclusions led CE Pro to claim that Mastered for iTunes is nothing more than "marketing hype."

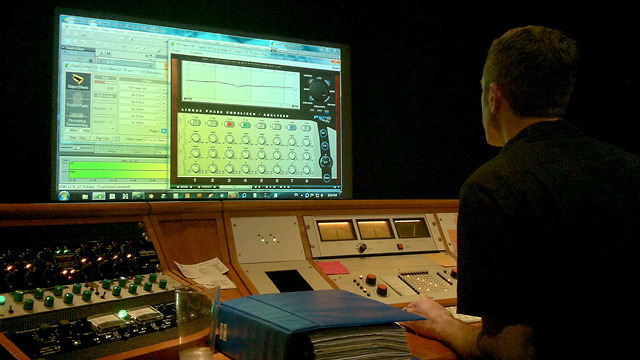

So, we set out to delve deeper into the technical aspects of Mastered for iTunes. We also attempted to do some of our own testing to see if there was any difference—good or bad—to be had from following the example of Masterdisk.

We enlisted Chicago Mastering Service engineers Jason Ward and Bob Weston to help us out, both of whom were somewhat skeptical that any knob tweaking could result in a better iTunes experience. We came away from the process learning that it absolutely is possible to improve the quality of compressed iTunes Plus tracks with a little bit of work, that Apple's improved compression process does result in a better sound, and that 24/96 files aren't a good format for consumers.

More is less

Human hearing, mathematics, and audio equipment all have their limits. Combined, they make selling direct-from-the-studio, 24-bit 96kHz (some files come in sampling rates as high as 192kHz) audio files impractical, if not outright useless.

Let's examine the basic aspects of digitized audio. Files are typically specified with a bit depth and a sampling frequency, such as the 16-bit 44.1kHz standard used for audio CDs. The bit depth determines the dynamic range of an audio recording, from the quietest sounds to the loudest. The sampling rate determines the maximum frequency that can be reproduced accurately and without distortion.
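Those two numbers also fix how much data a file carries: raw PCM runs at bit depth × sample rate × channels. The short Python sketch below (the function names are our own, for illustration) compares stereo CD audio, 24/96 and 24/192 studio files, and the 256kbps AAC rate used for iTunes Plus.

```python
def pcm_bitrate_kbps(bit_depth: int, sample_rate_hz: int, channels: int = 2) -> float:
    """Raw (uncompressed) PCM data rate in kilobits per second."""
    return bit_depth * sample_rate_hz * channels / 1000

def megabytes_per_minute(kbps: float) -> float:
    """Storage needed for one minute of audio (1 MB = 10**6 bytes)."""
    return kbps * 1000 * 60 / 8 / 1_000_000

formats = [
    ("CD, 16-bit/44.1kHz", pcm_bitrate_kbps(16, 44_100)),
    ("Studio, 24-bit/96kHz", pcm_bitrate_kbps(24, 96_000)),
    ("Studio, 24-bit/192kHz", pcm_bitrate_kbps(24, 192_000)),
    ("iTunes Plus AAC", 256.0),  # fixed encoder target, not derived from PCM
]

for label, kbps in formats:
    print(f"{label:22} {kbps:8.1f} kbps  {megabytes_per_minute(kbps):6.2f} MB/min")
```

CD audio works out to 1,411.2kbps (about 10.6MB per minute), while a 24/96 stereo file needs 4,608kbps (about 34.6MB per minute), roughly 18 times the size of a 256kbps AAC track.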

Using 16 bits for each sample allows a maximum dynamic range of 96dB. (With modern signal processing, it's even possible to accurately record and play back as much as 120dB of dynamic range.) Since even the most dynamic modern recordings have no more than about 60dB of dynamic range, 16-bit audio accurately captures the full dynamic range of nearly any audio source.
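The 96dB figure comes from the ratio between full scale and the smallest step a 16-bit sample can represent, expressed in decibels as 20·log10(2^bits). A minimal Python sketch of that calculation (the helper name is ours):

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of linear PCM: the ratio of full scale
    to one quantization step, in decibels."""
    return 20 * math.log10(2 ** bits)

for bits in (16, 24):
    print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB")
```

This prints 96.3dB for 16 bits and 144.5dB for 24 bits; each added bit buys roughly 6dB. The 120dB figure mentioned above relies on processing such as noise-shaped dither, which this simple formula does not model.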

Moving to 24 bits increases the dynamic range to 144dB. Assume that you have audio equipment capable of reproducing the entire 144dB range of sound—and we're not aware of any equipment available to consumers which can do so—and that you have turned it up loud enough to hear that full range. The loudest sounds would be loud enough to cause permanent hearing loss or possibly even death.

The maximum frequency that can be captured in a digital recording is exactly one-half of the sampling rate. This fact of digital signal processing life is brought to us by the Nyquist-Shannon sampling theorem, and is an incontrovertible mathematical truth. Audio sampled at 44.1kHz can reproduce frequencies up to 22.05kHz. Audio sampled at 96kHz can reproduce frequencies up to 48kHz. And audio sampled at 192kHz—some studios are using equipment and software capable of such high rates—can reproduce frequencies up to 96kHz.
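A tone above half the sample rate does not simply disappear: if it is not filtered out before conversion, it shows up (aliases) at a lower frequency, which is the distortion the Nyquist limit guards against. The Python sketch below, with helper names of our own choosing, computes the Nyquist limit for the sample rates discussed here and the frequency at which an unfiltered tone would land.

```python
def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent (half the rate)."""
    return sample_rate_hz / 2

def alias_hz(tone_hz: float, sample_rate_hz: float) -> float:
    """Apparent frequency of a pure tone after sampling. Tones above the
    Nyquist frequency fold back into the 0..Nyquist range."""
    return abs(tone_hz - sample_rate_hz * round(tone_hz / sample_rate_hz))

for fs in (44_100, 96_000, 192_000):
    print(f"{fs} Hz sampling -> Nyquist {nyquist_hz(fs):.0f} Hz")

# A 25 kHz tone sampled at 44.1 kHz with no anti-aliasing filter
print(alias_hz(25_000, 44_100))  # 19100
```

In practice, converters apply a low-pass anti-aliasing filter before sampling, so this folding is prevented rather than heard.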

However, human hearing typically spans about 20Hz to 20kHz at best. This range varies from person to person (some people can hear frequencies as high as 24kHz, while others top out much lower), and our ears' high-frequency response also diminishes with age. For the vast majority of listeners, a 44.1kHz sampling rate is capable of producing all frequencies that they can hear, and then some.

Furthermore, pushing ultrasonic content through typical playback equipment actually results in more distortion, not less.

"Neither audio transducers nor power amplifiers are free of distortion, and distortion tends to increase rapidly at the lowest and highest frequencies," according to Xiph.Org Foundation founder Chris Montgomery, who created the Ogg Vorbis audio format. "If the same transducer reproduces ultrasonics along with audible content, any nonlinearity will shift some of the ultrasonic content down into the audible range as an uncontrolled spray of intermodulation distortion products covering the entire audible spectrum. Nonlinearity in a power amplifier will produce the same effect."
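Montgomery's point can be illustrated numerically. In this Python sketch, two ultrasonic tones at 30kHz and 33kHz pass through a hypothetical weak nonlinearity, y = x + 0.1x², our own stand-in for an imperfect amplifier or driver rather than a model of any real device. The output gains a component at the 3kHz difference frequency, squarely in the audible band, even though neither input tone is audible.

```python
import cmath
import math

def tone_amplitude(signal, freq, fs):
    """Amplitude of one frequency component, via a single-bin DFT."""
    n = len(signal)
    acc = sum(s * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, s in enumerate(signal))
    return 2 * abs(acc) / n

fs = 192_000               # high enough to represent the ultrasonic tones
n = fs // 10               # 0.1 s of audio, so every tone fits whole cycles
f1, f2 = 30_000, 33_000    # two ultrasonic tones, inaudible on their own

clean = [0.5 * math.sin(2 * math.pi * f1 * k / fs) +
         0.5 * math.sin(2 * math.pi * f2 * k / fs) for k in range(n)]

# Hypothetical second-order nonlinearity; real devices are more complex.
distorted = [x + 0.1 * x * x for x in clean]

diff = f2 - f1             # 3 kHz intermodulation product
print(f"clean     @ {diff} Hz: {tone_amplitude(clean, diff, fs):.4f}")
print(f"distorted @ {diff} Hz: {tone_amplitude(distorted, diff, fs):.4f}")
```

The clean signal shows essentially nothing at 3kHz (0.0000), while the distorted one shows an amplitude of 0.0250, about 26dB below each input tone and entirely audible content that was never in the recording.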

Given these facts, 24/96 audio is overkill for typical playback scenarios and thus is not generally sold direct to consumers. But using such high resolution audio is useful in the studio, where multiple audio tracks are processed using digital effects software and then mixed together to create a single two-channel stereo track. The additional "headroom" provided by the extra dynamic range and frequency response lets recording engineers use various signal processing effects and mixing strategies while leaving room to filter out the noise typically created by such processes.
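One rough way to see why extra bits help during processing: each gain change or effect is followed by rounding back to the working bit depth, and later gain amplifies any rounding error. The Python sketch below runs a deliberately crude, hypothetical chain (a 40dB cut followed by a 40dB boost, with no dither) at 16 and 24 bits and measures the resulting signal-to-noise ratio. Studio software commonly processes internally in floating point, so treat this as an illustration of the principle, not a model of any particular workflow.

```python
import math

def quantize(x: float, bits: int) -> float:
    """Round a sample in [-1, 1) to the nearest step of a `bits`-deep
    linear PCM grid (no dither; clipping ignored for brevity)."""
    step = 2.0 ** -(bits - 1)
    return round(x / step) * step

def snr_after_processing(bits: int) -> float:
    """SNR in dB after a hypothetical chain: store at `bits`, cut gain by
    40 dB, store again, boost back by 40 dB, store again."""
    fs, n = 48_000, 4_800
    ideal = [0.9 * math.sin(2 * math.pi * 1_000 * k / fs) for k in range(n)]
    out = []
    for x in ideal:
        y = quantize(x, bits)
        y = quantize(y * 0.01, bits)   # -40 dB gain change
        y = quantize(y * 100, bits)    # +40 dB make-up gain
        out.append(y)
    signal = sum(x * x for x in ideal)
    noise = sum((y - x) ** 2 for x, y in zip(ideal, out))
    return 10 * math.log10(signal / noise)

for bits in (16, 24):
    print(f"{bits}-bit chain: SNR {snr_after_processing(bits):.1f} dB")
```

The 24-bit run finishes with a far higher SNR, because its quantization floor sits roughly 48dB (8 extra bits at about 6dB each) lower, leaving room to absorb the rounding error that the 16-bit chain bakes into the signal.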

Apple recommends that audio engineers record and submit the highest quality audio files they can. This typically means 24/96 files either recorded digitally or sampled from analog masters. But if such audio files aren't useful for listening, why does Apple request them?
