A patent application (number 20120036433) published by the U.S. Patent & Trademark Office shows that Apple is looking into three-dimensional interfaces for at least some of its products. The application covers 3D user interface effects on a display, produced by using properties of motion.

The techniques disclosed use a compass, MEMS accelerometer, GPS module, and MEMS gyrometer to infer a frame of reference for a hand-held device. This can provide a true Frenet frame, i.e., X- and Y-vectors for the display, and also a Z-vector that points perpendicularly to the display. In fact, with various inertial cues from the accelerometer, gyrometer, and other instruments that report their states in real time, it is possible to track the Frenet frame of the device in real time and so provide a continuous 3D frame of reference.

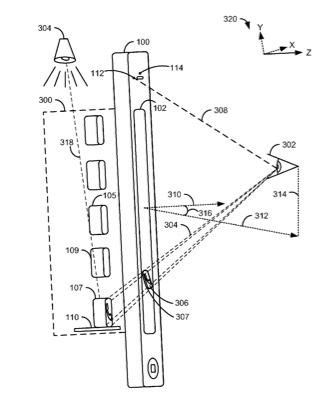
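To make the idea of inferring a frame of reference from sensors more concrete, here is a minimal sketch of a common accelerometer-plus-compass construction. It is not the method claimed in the application, which also draws on the gyrometer and GPS module. It assumes the device is roughly at rest, so the accelerometer reading points straight up, and that the compass reading is not parallel to that direction. The function name `world_axes_in_device_coords` and its parameters are hypothetical.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale a 3-vector to unit length; reject zero-length input."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def world_axes_in_device_coords(accel, magnetic):
    """Return (east, north, up) unit vectors in the device's own axes.

    accel:    accelerometer reading; assumed to point "up" when at rest.
    magnetic: compass (magnetometer) reading in the same device axes.
    Both assumptions are illustrative, not taken from the application.
    """
    up = normalize(accel)
    # The magnetic field points roughly north (plus a vertical part),
    # so crossing it with "up" leaves a vector pointing east.
    east = normalize(cross(magnetic, up))
    # Completing the right-handed set gives north.
    north = cross(up, east)
    return east, north, up
```

For a device lying flat with its top edge pointing north, a call such as `world_axes_in_device_coords((0.0, 0.0, 9.81), (0.0, 20.0, -40.0))` returns east, north, and up aligned with the device's own X, Y, and Z axes. The three vectors together describe how the display is oriented relative to the world, which is the kind of frame the application proposes to track continuously.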

Once this continuous frame of reference is known, the position of a user’s eyes may be either inferred or calculated directly using the device’s front-facing camera. With the position of the user’s eyes and a continuous 3D frame of reference for the display, more realistic virtual 3D depictions of the objects on the device’s display may be created and interacted with by the user. The inventors are Mark Zimmer, Geoff Stahl, David Hayward, and Frank Doepke.

Here’s Apple’s background on the invention: “It’s no secret that video games now use various properties of motion and position collected from, e.g., compasses, accelerometers, gyrometers, and Global Positioning System (GPS) units in hand-held devices or control instruments to improve the experience of play in simulated, i.e., virtual, three dimensional (3D) environments. In fact, software to extract so-called ‘six axis’ positional information from such control instruments is well-understood, and is used in many video games today.

“The first three of the six axes describe the ‘yaw-pitch-roll’ of the device in three dimensional space. In mathematics, the tangent, normal, and binormal unit vectors for a particle moving along a continuous, differentiable curve in three dimensional space are often called T, N, and B vectors, or, collectively, the ‘Frenet frame,’ and are defined as follows: T is the unit vector tangent to the curve, pointing in the direction of motion; N is the derivative of T with respect to the arclength parameter of the curve, divided by its length; and B is the cross product of T and N. The ‘yaw-pitch-roll’ of the device may also be represented as the angular deltas between successive Frenet frames of a device as it moves through space. The other three axes of the six axes describe the ‘X-Y-Z’ position of the device in relative three dimensional space, which may also be used in further simulating interaction with a virtual 3D environment.

“Face detection software is also well-understood in the art and is applied in many practical applications today including: digital photography, digital videography, video gaming, biometrics, surveillance, and even energy conservation. Popular face detection algorithms include the Viola-Jones object detection framework and the Schneiderman & Kanade method. Face detection software may be used in conjunction with a device having a front-facing camera to determine when there is a human user present in front of the device, as well as to track the movement of such a user in front of the device.

“However, current systems do not take into account the location and position of the device on which the virtual 3D environment is being rendered in addition to the location and position of the user of the device, as well as the physical and lighting properties of the user’s environment in order to render a more interesting and visually pleasing interactive virtual 3D environment on the device’s display.

“Thus, there is need for techniques for continuously tracking the movement of an electronic device having a display, as well as the lighting conditions in the environment of a user of such an electronic device and the movement of the user of such an electronic device–and especially the position of the user of the device’s eyes. With information regarding lighting conditions in the user’s environment, the position of the user’s eyes, and a continuous 3D frame-of-reference for the display of the electronic device, more realistic virtual 3D depictions of the objects on the device’s display may be created and interacted with by the user.”
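The T, N, and B vectors defined in Apple’s background text can be computed from a particle’s velocity and acceleration. The sketch below is only an illustration of that textbook definition, not code from the application. It relies on the fact that the derivative of T with respect to arclength points along the part of the acceleration that is perpendicular to T. The function name `frenet_frame` and the helix used as an example are assumptions made for this illustration.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a 3-vector to unit length; reject zero-length input."""
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def frenet_frame(velocity, acceleration):
    """Return the (T, N, B) unit vectors for a moving particle.

    T: unit tangent, in the direction of motion.
    N: unit normal, the direction in which T is turning.
    B: binormal, the cross product of T and N.
    Raises ValueError for a particle at rest or moving in a straight
    line, where N is undefined.
    """
    T = normalize(velocity)
    # Remove the component of the acceleration along T; what is left
    # is parallel to dT/ds, so normalizing it yields N.
    along = dot(acceleration, T)
    perpendicular = tuple(a - along * t for a, t in zip(acceleration, T))
    N = normalize(perpendicular)
    B = cross(T, N)
    return T, N, B


# Example: the helix r(t) = (cos t, sin t, t) at t = 0 has
# velocity (0, 1, 1) and acceleration (-1, 0, 0).
T, N, B = frenet_frame((0.0, 1.0, 1.0), (-1.0, 0.0, 0.0))
```

Evaluating the frame at successive instants and comparing the results gives the “angular deltas between successive Frenet frames” that the background text relates to yaw, pitch, and roll.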