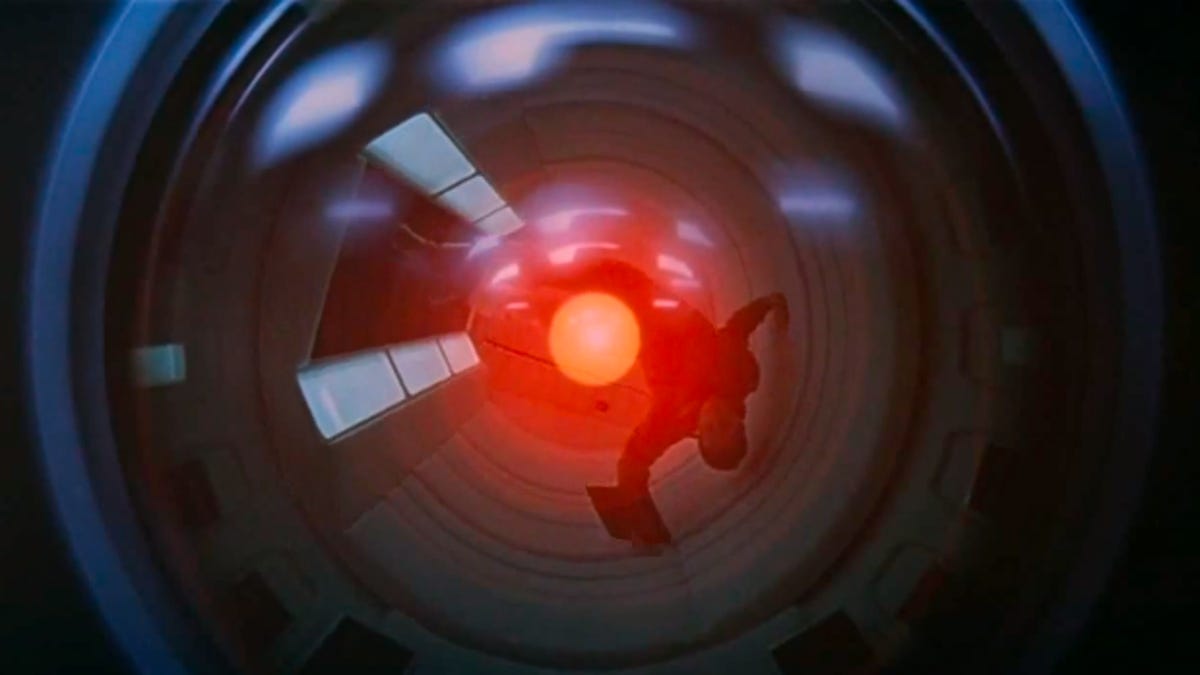

Will AI need therapy in the future?

Because maybe "turning it off and on again" isn't a long term solution!

Considering we live in a world where MIT researchers created Norman, a psychopathic AI, it makes sense -- perhaps we'll soon occupy a future where we have to "treat" AI for potential personality disorders, in much the same way we treat human beings.

That's the position of researchers from Kansas State University. They believe that as artificial intelligence grows in complexity, there's a real chance it could fall prey to "challenges of complexity" -- meaning personality disorders. Perhaps AI could benefit from decades of knowledge about how we treat and help human beings suffering from PTSD, depression or psychosis?

Cognitive Behaviour Therapy, for example, tries to systematically change behaviour patterns in human beings. Why couldn't similar structured processes be used to change the behaviour of rogue AI in the future? The argument: Perhaps we should consider using similar frameworks when thinking about AI. That means identifying the misbehaviour, diagnosing the disorder, and then figuring out a system of treatment to fix the issue.

As for the treatment? The researchers suggest two starting points. The first: "correctional training", which is less invasive and mirrors cognitive behaviour therapy. The second is closer to medication therapy: manipulating reward signals via external means as a way to fundamentally alter AI behaviour. "When a disorder is diagnosed in an AI agent," explains the paper, "it is not always feasible to simply decommission or reset the agent."

In short: You can't just turn it off and on again.

The paper is just a starting point, but it's fascinating. When you consider the anxiety surrounding artificial intelligence, it's an intriguing topic (I can't believe I've written a whole article on AI without a single "Skynet" joke). It's hard to imagine a day when we sit AI down on a leather couch and start getting Freudian, but maybe techniques that work on humans will one day be useful for tackling some of the broader issues AI will no doubt face in the future.
