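IBM has simulated a 56-qubit quantum circuit on a classical supercomputer. It calculated a slice of the 56-qubit circuit's output state using only 3.0 terabytes of memory, and the complete output state of a 49-qubit circuit using 4.5 terabytes.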

It was previously believed that current supercomputers would not be able to simulate more than 49 qubits.

They divided the simulation task into many parallel pieces, which allowed them to use the many processors of a supercomputer simultaneously. This gave them the efficiency needed to simulate a 56-qubit quantum circuit.
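The point of slicing is that each piece of the output state can be computed without the others. The minimal Python sketch below illustrates only that property, using a hypothetical toy circuit (Hadamards on every qubit, then CZ gates between neighbouring qubits on a line) whose amplitudes have a closed form. It is not IBM's tensor-slicing method, and the names `amplitude`, `compute_slice` and `simulate_in_slices` are illustrative assumptions. A slice is modeled here as fixing the top `k` qubits, giving `2^k` independent jobs of `2^(n-k)` amplitudes each.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy circuit (not the circuit from the paper): Hadamard on every
# qubit of |0...0>, then a CZ between each pair of neighbouring qubits on a line.
# Every amplitude has a closed form, so any subset of them can be computed
# without materialising the rest of the state vector.
def amplitude(x: int, n: int) -> float:
    """Amplitude of basis state x (an n-bit integer) for the toy circuit."""
    both_one = bin(x & (x >> 1)).count("1")  # neighbouring pairs in |11>
    return (-1) ** both_one / math.sqrt(2 ** n)


def compute_slice(slice_id: int, n: int, k: int) -> list[float]:
    """All 2^(n-k) amplitudes whose top k qubits equal the bits of slice_id."""
    m = n - k
    base = slice_id << m
    return [amplitude(base | low, n) for low in range(2 ** m)]


def simulate_in_slices(n: int, k: int, workers: int = 4) -> list[list[float]]:
    """Compute the 2^k slices independently, handing them to a worker pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda s: compute_slice(s, n, k), range(2 ** k)))


slices = simulate_in_slices(n=12, k=3)
print(len(slices), len(slices[0]))              # 8 512
print(sum(a * a for sl in slices for a in sl))  # 1.0
```

A thread pool keeps the sketch simple; because CPython's GIL serializes this pure-Python work, a real speedup would need `ProcessPoolExecutor` or, as in the paper's setting, separate nodes or machines.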

“IBM pushed the envelope,” says Itay Hen at the University of Southern California. “It’s going to be much harder for quantum-device people to exhibit [quantum] supremacy.”

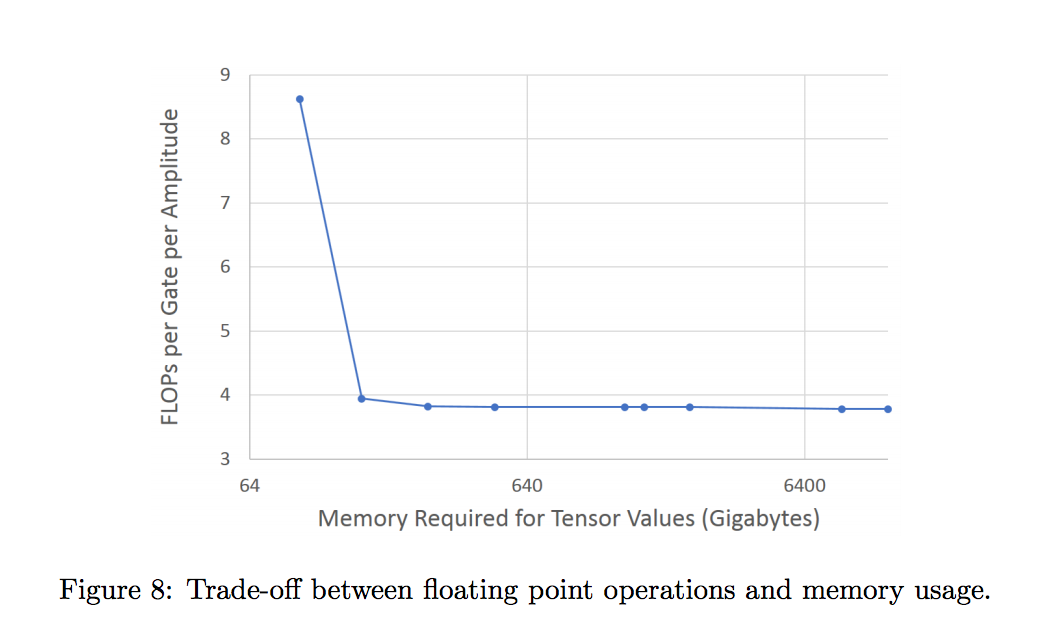

For the 49-qubit circuit, the final quantum state is calculated in slices: each slice involves the calculation of 2^38 amplitudes, and a total of 2^11 slices were calculated (2^38 × 2^11 = 2^49 amplitudes). For the 56-qubit circuit, only one slice of 2^37 amplitudes was calculated, out of the 2^19 such slices that define the final quantum state.
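The slice counts follow from simple powers of two: with `n` qubits and `2^m` amplitudes per slice, there are `2^(n-m)` slices. The sketch below checks the figures quoted above and estimates the memory needed just to hold one slice's output amplitudes. The 8 bytes per amplitude (single-precision complex) is an assumption, and the estimate ignores intermediate tensors, so it is a lower bound rather than a reproduction of the 4.5 TB and 3.0 TB totals reported in the paper. `slice_layout` is a hypothetical helper name.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def slice_layout(n_qubits: int, log2_slice: int, bytes_per_amplitude: int = 8):
    """Return (number of slices, bytes needed to hold one slice's amplitudes).

    bytes_per_amplitude=8 assumes single-precision complex numbers (two
    float32 values); use 16 for double precision.
    """
    sliced_qubits = n_qubits - log2_slice
    num_slices = 2 ** sliced_qubits
    slice_bytes = (2 ** log2_slice) * bytes_per_amplitude
    return num_slices, slice_bytes


for n, log2_slice in [(49, 38), (56, 37)]:
    num_slices, slice_bytes = slice_layout(n, log2_slice)
    print(f"{n} qubits: {num_slices} slices of 2^{log2_slice} amplitudes, "
          f"{slice_bytes / TIB:g} TiB per slice")
# 49 qubits: 2048 slices of 2^38 amplitudes, 2 TiB per slice
# 56 qubits: 524288 slices of 2^37 amplitudes, 1 TiB per slice
```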

All experimental results were obtained over the course of two days on Lawrence Livermore National Laboratory’s Vulcan Blue Gene/Q supercomputer. This time included setting up the experiments described in the paper, as well as running additional experiments beyond its scope. The memory requirements called for strong scaling to 4,096 nodes, with 64 TB of memory in total, for each (parallel) calculation.
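As a rough check on the figures above, 64 TB spread over 4,096 nodes works out to 16 GiB per node if "TB" is read as binary tebibytes (an assumption). The sketch below derives that figure and, with a hypothetical helper `nodes_needed`, shows how a fixed memory requirement translates into a node count under strong scaling. Rounding up to a power of two is also an assumption, chosen so that `2^k` amplitudes split evenly across nodes; it is not a documented property of Vulcan's job partitions.

```python
import math

GIB = 2 ** 30
TIB = 2 ** 40

# Figures from the text; reading "TB" as binary tebibytes is an assumption.
TOTAL_MEMORY = 64 * TIB
NODES = 4096
PER_NODE = TOTAL_MEMORY // NODES
print(PER_NODE / GIB)  # 16.0


def nodes_needed(required_bytes: int, bytes_per_node: int = PER_NODE) -> int:
    """Smallest power-of-two node count whose combined memory covers required_bytes.

    Rounding up to a power of two is an assumption: it lets 2^k amplitudes be
    split evenly across nodes.
    """
    raw = max(1, math.ceil(required_bytes / bytes_per_node))
    return 1 << (raw - 1).bit_length()


print(nodes_needed(40 * TIB))  # 4096
print(nodes_needed(8 * TIB))   # 512
```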

Conclusions and Future Work

The researchers addressed a fundamental question in the engineering of more powerful computing devices. Their key contribution is a new circuit simulation methodology that allowed them to break the 50-qubit barrier, previously thought to be the limit beyond which quantum computers cannot be simulated. Their results confirm the expected Porter-Thomas distribution of outcome probabilities for universal random circuits. This was confirmed per slice across the entire quantum state produced by the 49-qubit circuit, and for a single slice of the quantum state produced by the 56-qubit circuit. As the paper's introduction notes, the ability to calculate quantum amplitudes for measured outcomes is important for assessing whether quantum devices operate correctly; however, this application was not explored in the present study.
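The Porter-Thomas distribution says that, for a random state over `N` basis outcomes, the scaled probabilities `N·p` follow an exponential law, `P(N·p > t) = e^(-t)`, so they have mean 1, variance 1, and about 36.8% of them exceed 1. The sketch below checks those three statistics on an idealized stand-in: a Haar-random state sampled by normalizing complex Gaussian amplitudes. This is an assumption for illustration; it does not simulate a random circuit, and the function names are hypothetical.

```python
import math
import random


def random_state_probabilities(n_qubits: int, seed: int = 0) -> list[float]:
    """Outcome probabilities of a Haar-random state, sampled via complex Gaussians."""
    rng = random.Random(seed)
    dim = 2 ** n_qubits
    amps = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(dim)]
    norm = sum(abs(a) ** 2 for a in amps)
    return [abs(a) ** 2 / norm for a in amps]


def porter_thomas_stats(probs: list[float]) -> tuple[float, float, float]:
    """Mean, variance and P(N*p > 1) of the scaled probabilities N*p."""
    n = len(probs)
    scaled = [n * p for p in probs]
    mean = sum(scaled) / n
    var = sum((s - mean) ** 2 for s in scaled) / n
    tail = sum(1 for s in scaled if s > 1) / n
    return mean, var, tail


mean, var, tail = porter_thomas_stats(random_state_probabilities(14))
# Porter-Thomas predicts mean 1, variance close to 1, tail close to e^-1.
print(mean, var, tail, math.exp(-1))
```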

If a 49-qubit random circuit that was previously thought to be impossible to simulate on a classical computer can now be simulated in slices whose data structures fit comfortably within the memory of high-end servers, then routine calculations of amplitudes for measured outcomes of such quantum devices become possible without access to supercomputers. The researchers plan to explore fully how far this extends, both in the number of qubits and in circuit depth. Because tensor slice calculations are embarrassingly parallel, circuits whose output amplitudes can be calculated on high-end servers can also, in principle, be simulated in their entirety by distributing the calculations across a sufficiently large number of such servers. These calculations are no longer limited by the resources of specific supercomputers; one could, in principle, run them in the cloud. For this class of circuits, whose extent still needs to be determined, whether they can be fully simulated has become more a question of economics than of physical plausibility. Beyond this computational utility at the scale of a complete circuit, tensor slicing can be instrumental for algorithmic analysis and for simulating active control subcircuits, where measured slice outcomes can serve as dynamic input to the remaining part of the circuit.
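Checking a measured outcome only requires the slice that contains it. The sketch below assumes a simplified model in which a slice is defined by fixing the leftmost qubits of the output bitstring; the paper slices tensor indices, so this mapping is an illustrative assumption, as are the helper names `locate` and `group_by_slice`. Grouping a batch of measured samples by slice means each needed slice is computed once, and different slices could go to different servers.

```python
from collections import defaultdict


def locate(bitstring: str, sliced_qubits: int) -> tuple[int, int]:
    """Map a measured bitstring to (slice index, offset within that slice).

    Assumes the leftmost `sliced_qubits` characters are the fixed (sliced)
    qubit values and the remaining characters index amplitudes in the slice.
    """
    if not bitstring or set(bitstring) - {"0", "1"}:
        raise ValueError("bitstring must be a non-empty string of 0s and 1s")
    if not 0 <= sliced_qubits <= len(bitstring):
        raise ValueError("sliced_qubits out of range")
    x = int(bitstring, 2)
    m = len(bitstring) - sliced_qubits
    return x >> m, x & ((1 << m) - 1)


def group_by_slice(samples: list[str], sliced_qubits: int) -> dict[int, list[int]]:
    """Group measured samples so that each required slice is computed once."""
    groups: dict[int, list[int]] = defaultdict(list)
    for bits in samples:
        slice_id, offset = locate(bits, sliced_qubits)
        groups[slice_id].append(offset)
    return dict(groups)


# 56 qubits with 19 sliced qubits: 2^19 slices of 2^37 amplitudes each.
print(locate("1" * 56, 19))  # (524287, 137438953471)
print(group_by_slice(["0011", "0010", "1100"], 2))  # {0: [3, 2], 3: [0]}
```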

arXiv: Breaking the 49-Qubit Barrier in the Simulation of Quantum Circuits

With the current rate of progress in quantum computing technologies, 50-qubit systems will soon become a reality. To assess, refine and advance the design and control of these devices, one needs a means to test and evaluate their fidelity. This in turn requires the capability of computing ideal quantum state amplitudes for devices of such sizes and larger. In this study, we present a new approach for this task that significantly extends the boundaries of what can be classically computed. We demonstrate our method by presenting results obtained from a calculation of the complete set of output amplitudes of a universal random circuit with depth 27 in a 2D lattice of 7 × 7 qubits. We further present results obtained by calculating an arbitrarily selected slice of 2^37 amplitudes of a universal random circuit with depth 23 in a 2D lattice of 8 × 7 qubits. Such calculations were previously thought to be impossible due to impracticable memory requirements. Using the methods presented in this paper, the above simulations required 4.5 and 3.0 TB of memory, respectively, to store calculations, which is well within the limits of existing classical computers.

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technologies and trends, including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting-edge technologies, he is currently a Co-Founder of a startup and fundraiser for high-potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and a guest on numerous radio shows and podcasts. He is open to public speaking and advising engagements.
