Microsoft's new record: Speech recognition AI now transcribes as well as a human

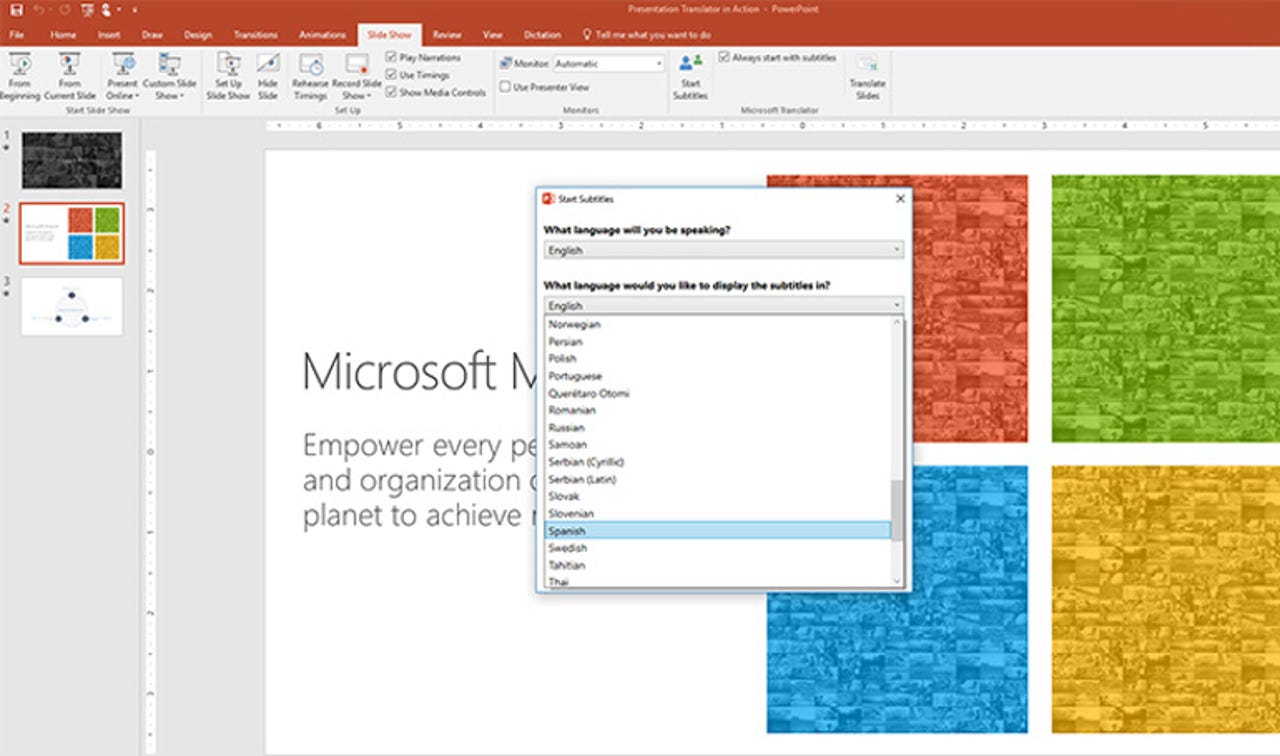

Microsoft is applying its work in speech recognition in services such as Speech Translator, which aims to translate presentations in real time for multilingual audiences.

A speech-recognition system developed by Microsoft researchers has achieved a word error rate on par with human transcribers.

Microsoft on Monday announced that its conversational speech-recognition system hit an error rate of 5.1 percent, matching the error rate of professional human transcribers.
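For readers unfamiliar with the metric, word error rate is conventionally computed as the minimum number of word substitutions, deletions and insertions needed to turn the system's transcript into the reference transcript, divided by the number of words in the reference. The sketch below illustrates that standard definition only; the function name and the whitespace tokenization are assumptions for illustration, not details of Microsoft's evaluation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative only: splits on whitespace and does no text
    normalization, which a real benchmark scorer would apply.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missed)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    # One substituted word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

On this definition, a 5.1 percent rate means roughly five word errors for every 100 words in the reference transcript.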

Microsoft last year believed its 5.9 percent error rate had achieved human parity, but IBM researchers argued that the milestone would require a system achieving a rate of 5.1 percent, slightly lower than IBM's own best word error rate of 5.5 percent.

IBM's study of human transcribers allowed several humans to listen to the conversation more than once, and picked the result of the best transcriber.

As with last year's test, Microsoft's system was measured against the Switchboard corpus, a dataset consisting of about 2,400 two-sided telephone conversations between strangers with US accents.

The test involves transcribing conversations between people discussing a range of topics, from sports to politics, though the conversations are relatively formal in nature.

Unlike in last year's test, Microsoft didn't measure its system against another dataset called CallHome, which includes open-ended and more casual conversations between family members. Error rates on CallHome are more than double those on Switchboard for both humans and machines.

Still, Microsoft did manage to shave 12 percent off last year's Switchboard results after tweaking its neural-net acoustic and language models.

"We introduced an additional CNN-BLSTM (convolutional neural network combined with bidirectional long-short-term memory) model for improved acoustic modeling. Additionally, our approach to combine predictions from multiple acoustic models now does so at both the frame/senone and word levels," said Xuedong Huang, a technical fellow at Microsoft.

"Moreover, we strengthened the recognizer's language model by using the entire history of a dialog session to predict what is likely to come next, effectively allowing the model to adapt to the topic and local context of a conversation."
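The article does not say how the predictions are combined. As a rough illustration of what combining acoustic models at the frame level can look like, the sketch below assumes a simple weighted average of each model's per-frame probability distribution over sound classes; the function name, the weights and the averaging scheme are all hypothetical and not taken from Microsoft's system.

```python
def combine_frame_posteriors(model_posteriors, weights=None):
    """Weighted average of per-frame class probabilities across models.

    model_posteriors: one entry per model, each a list of frames, each
    frame a list of class probabilities. All models are assumed to
    cover the same frames and classes. Hypothetical sketch only.
    """
    n_models = len(model_posteriors)
    if weights is None:
        weights = [1.0 / n_models] * n_models  # assume equal trust in each model

    n_frames = len(model_posteriors[0])
    combined = []
    for t in range(n_frames):
        n_classes = len(model_posteriors[0][t])
        frame = [
            sum(w * model[t][k] for w, model in zip(weights, model_posteriors))
            for k in range(n_classes)
        ]
        total = sum(frame)
        combined.append([p / total for p in frame])  # renormalize to sum to 1
    return combined


if __name__ == "__main__":
    model_a = [[0.8, 0.2]]  # one frame, two classes
    model_b = [[0.4, 0.6]]
    print(combine_frame_posteriors([model_a, model_b]))
```

The intuition is that models with different architectures tend to make different mistakes, so a pooled estimate can be more reliable than any single model's output.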

Despite the new milestone, Microsoft acknowledges machines still find it tough to recognize different accents and speaking styles, and don't perform well in noisy conditions.

And although Microsoft was able to train its models to use context to transcribe a conversation more accurately, it still has some way to go before it can train a computer to actually understand the meaning of a conversation.

Google earlier this year announced its systems achieved a 4.9 percent word error rate, though it's not known what test it used.
